Migrating from a Monolith to EDA on AWS

Posted on September 21, 2025 • 5 minutes • 1045 words

Migrating from a monolithic solution to an event-driven architecture (EDA) can be a daunting task, but it’s a journey that many organisations are now embarking on to enable greater agility, scalability, and innovation.

In this post, I’ll share practical insights and techniques based on a real customer migration I worked on. These lessons are broadly applicable, whether you’re running in AWS today or considering how to modernize your legacy workloads.

The Starting Point

The migration began with a real customer project: an online gaming company. The starting point was a large .NET Framework application running on Windows Servers with a SQL Server backend.

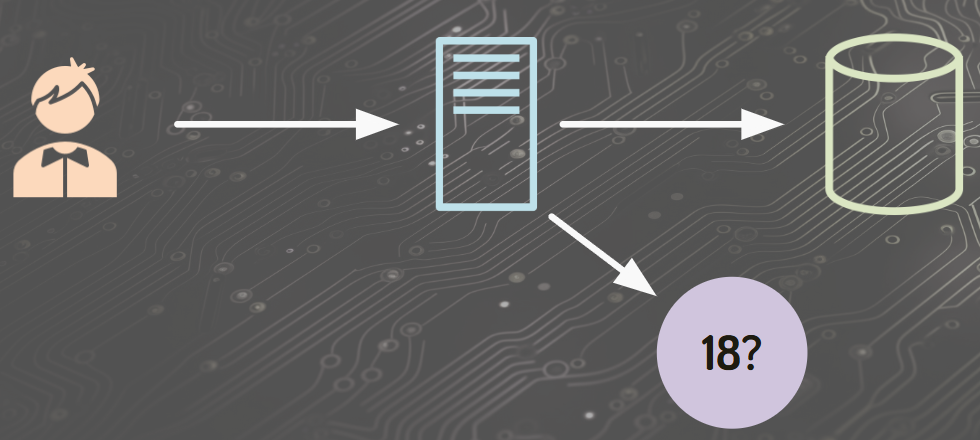

When a new customer registered, automated age verification checks needed to be run. This involved calling a third-party API, processing the result, and deciding whether to allow deposits or lock the account.

The entire flow was coded inside the registration endpoint, making synchronous calls to multiple internal classes and the third-party API. As you might guess, this led to tight coupling and limited flexibility.

The Goal

The vision was to break the application into domain-based services. Each service would own its own logic and data, reducing coupling and isolating business functionality.

For example, all logic around Age Verification could be encapsulated in its own service. That way, any change to verification (like integrating a new provider) would be localized to that single service.

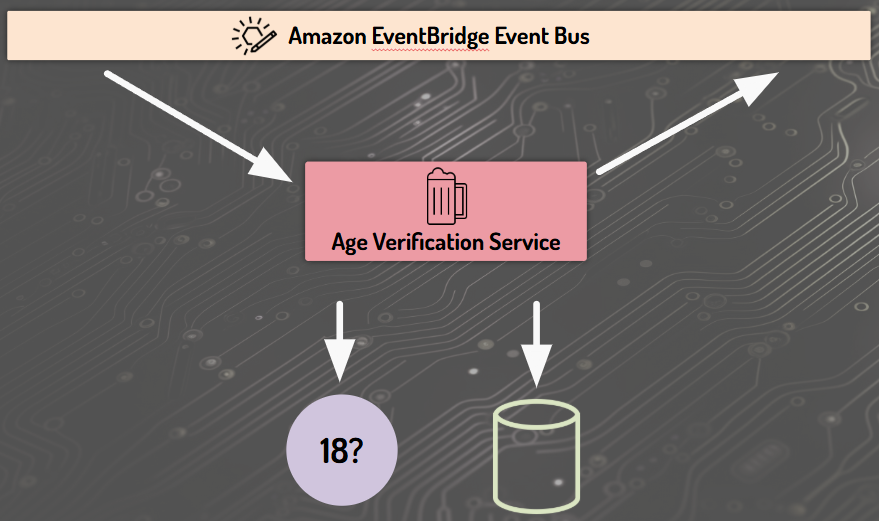

Introducing the Age Verification Service

We started by creating a standalone Age Verification Service, which:

- Owned its own database for results and audit history.

- Exposed events to notify the rest of the system.

- Provided optional read APIs for on-demand lookups.

The integration was done incrementally using several established patterns that I’ll walk through below.

Key Patterns for Migration

Strangler Fig

Coined by Martin Fowler, the Strangler Fig pattern involves slowly growing a new system around the edges of the old one until the monolith becomes obsolete.

We began by extracting the age verification process first, leaving the rest of the system unchanged. Over time, more functionality was peeled away until the monolith had little left.

Event Notification

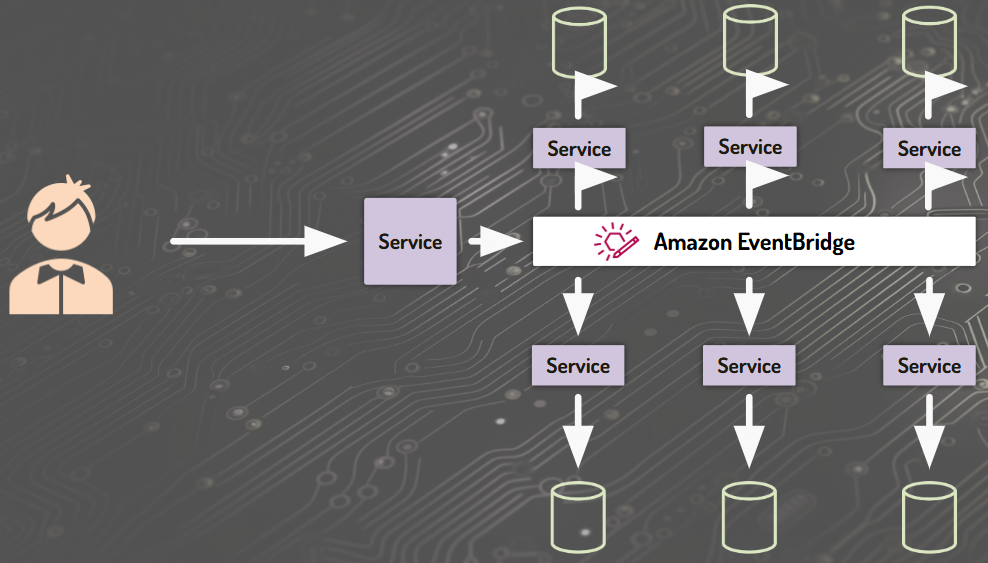

We adopted the Event Notification pattern using Amazon EventBridge.

- When a customer registered, the monolith published a `CustomerRegistered` event.

- The registration process itself didn’t wait on the outcome – it simply broadcast the event.

- Other services, like the Age Verification Service, subscribed and acted independently.

This approach decoupled services and enabled asynchronous behavior without forcing upstream systems to change.
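As a rough illustration of the publishing side, the sketch below builds a `CustomerRegistered` entry and sends it with the EventBridge `PutEvents` API. The bus name, source string, and detail fields are assumptions made for this example, not the values used in the project. The client is passed in, so the function works with `boto3.client("events")` or with a test double. The real monolith was .NET; Python is used here only to match the pseudocode elsewhere in this post.

```python
import json

# Hypothetical names chosen for this example only.
EVENT_BUS_NAME = "customer-events"
EVENT_SOURCE = "monolith.registration"


def build_customer_registered_entry(customer_id: str, date_of_birth: str) -> dict:
    """Build one PutEvents entry describing a newly registered customer."""
    return {
        "EventBusName": EVENT_BUS_NAME,
        "Source": EVENT_SOURCE,
        "DetailType": "CustomerRegistered",
        # EventBridge expects Detail to be a JSON string, not a dict.
        "Detail": json.dumps(
            {"customerId": customer_id, "dateOfBirth": date_of_birth}
        ),
    }


def publish_customer_registered(events_client, customer_id: str, date_of_birth: str) -> None:
    """Publish the event without waiting for any consumer to act on it."""
    entry = build_customer_registered_entry(customer_id, date_of_birth)
    response = events_client.put_events(Entries=[entry])
    # put_events can succeed as a call while rejecting entries, so check the count.
    if response.get("FailedEntryCount", 0) > 0:
        raise RuntimeError(f"EventBridge rejected the event: {response['Entries']}")
```

Checking `FailedEntryCount` matters because a successful API call does not guarantee that every entry was accepted.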

Dark Launching

To test the new service safely, we ran it in dark launch mode.

- The monolith still executed the legacy code.

- The new service consumed the event and ran checks in parallel, storing results in its own DB.

- The output was ignored during this phase.

We used reports to compare old vs. new outcomes, while debugging and tuning without customer impact.
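The comparison report can be as simple as a join on customer ID. The sketch below is one way to do it, assuming both systems' outcomes have been exported as dictionaries keyed by customer ID; the function name, outcome strings, and sample data are invented for illustration.

```python
from collections import Counter


def compare_outcomes(legacy: dict, candidate: dict) -> dict:
    """Compare verification outcomes keyed by customer ID.

    Returns mismatching customers, customers the new service has not
    processed, and a tally of (legacy, new) outcome pairs.
    """
    mismatches = {}
    missing = []
    pairs = Counter()
    for customer_id, legacy_result in legacy.items():
        if customer_id not in candidate:
            missing.append(customer_id)  # event not processed yet, or lost
            continue
        new_result = candidate[customer_id]
        pairs[(legacy_result, new_result)] += 1
        if new_result != legacy_result:
            mismatches[customer_id] = (legacy_result, new_result)
    return {"mismatches": mismatches, "missing": missing, "pairs": dict(pairs)}


# Made-up sample data, not results from the project.
legacy_results = {"c-1": "passed", "c-2": "failed", "c-3": "passed"}
new_results = {"c-1": "passed", "c-2": "passed"}
report = compare_outcomes(legacy_results, new_results)
print(report["mismatches"])  # {'c-2': ('failed', 'passed')}
print(report["missing"])     # ['c-3']
```

Tracking missing customers separately from mismatches helps distinguish logic differences from events that never arrived.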

Feature Toggles

We then introduced feature flags to remotely control whether the monolith or the new service was authoritative.

AWS offers simple ways to implement this, such as SSM Parameter Store or AppConfig, or you can use third-party tools like LaunchDarkly.

Once validated, we flipped the toggle to disable the monolith’s verification code and fully rely on the new service.

As an example, suppose you have code similar to this in the monolith:

    def register(user_data):
        SaveUserToDatabase(user_data)
        IsVerified = HttpCallToAgeVerificationService(user_data)
        PersistAgeVerificationToDatabase(IsVerified, user_data)

A feature flag could be added like this, with the new event notification code firing at all times:

    def register(user_data):
        SaveUserToDatabase(user_data)
        if not feature_flag_enabled(USE_NEW_AGE_VERIFICATION_SERVICE):
            IsVerified = HttpCallToAgeVerificationService(user_data)
            PersistAgeVerificationToDatabase(IsVerified, user_data)
        SendUserRegisteredEventNotification(user_data)

When ready, the USE_NEW_AGE_VERIFICATION_SERVICE flag can be enabled. Because the legacy path runs only while the flag is off, the monolith then just sends the event and no longer makes the age verification call itself.

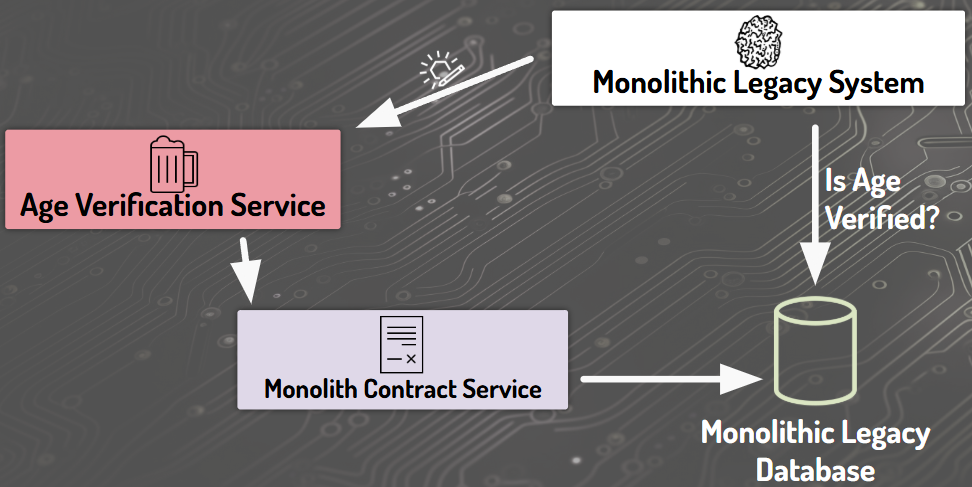

Legacy Mimic

Finally, we needed to maintain compatibility. The monolith still expected verification results in its own database.

To solve this, we introduced a Legacy Mimic service:

- The Age Verification Service published events like `AgeVerificationPassed`.

- The Legacy Mimic consumed those events and updated the legacy DB.

This kept the monolith running without invasive changes, and the mimic was later decommissioned once the monolith was retired.
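A Legacy Mimic handler can be very small. The sketch below assumes events arrive in the standard EventBridge envelope (`detail-type`, `detail`) and uses an in-memory SQLite database as a stand-in for the legacy SQL Server. The table name, column names, and status values are hypothetical.

```python
import sqlite3

# Maps event names to the status value written to the legacy table.
STATUS_BY_DETAIL_TYPE = {
    "AgeVerificationPassed": "PASSED",
    "AgeVerificationFailed": "FAILED",
}


def handle_age_verification_event(event: dict, legacy_db: sqlite3.Connection) -> bool:
    """Copy an age verification outcome into the legacy table.

    Returns False for event types the mimic does not care about.
    """
    status = STATUS_BY_DETAIL_TYPE.get(event.get("detail-type"))
    if status is None:
        return False
    customer_id = event["detail"]["customerId"]
    with legacy_db:  # commits on success, rolls back on error
        legacy_db.execute(
            "UPDATE Customers SET AgeVerificationStatus = ? WHERE CustomerId = ?",
            (status, customer_id),
        )
    return True


# Demo against an in-memory database standing in for the legacy schema.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE Customers ("
    "CustomerId TEXT PRIMARY KEY, "
    "AgeVerificationStatus TEXT DEFAULT 'PENDING')"
)
db.execute("INSERT INTO Customers (CustomerId) VALUES ('c-1')")
handle_age_verification_event(
    {"detail-type": "AgeVerificationPassed", "detail": {"customerId": "c-1"}}, db
)
print(db.execute("SELECT AgeVerificationStatus FROM Customers").fetchone())  # ('PASSED',)
```

Because the update sets an absolute status rather than incrementing anything, replaying the same event leaves the row unchanged, which suits at-least-once delivery.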

Challenges and Solutions

The migration was completed without major issues, though we did run into several common problems:

- Latency – Event-driven systems introduce delays compared to synchronous monolith calls. In our case, we designed database defaults to handle “verification pending” states.

- Race Conditions – Events can arrive out of order. Services need retries, idempotency, and sometimes fallback reads from a source of truth API.

- Idempotency – At-least-once delivery means duplicates happen. We used event IDs and deduplication checks in Amazon DynamoDB to prevent multiple offers being assigned. It’s important to test idempotency.

- Event Chaos – Without governance, events get messy. We built an Event Catalog documenting producers, consumers, and attributes to ensure consistency. This helps avoid events with inconsistent naming, duplicate events with different names, events that serve no purpose, or events with more than one responsibility.

- Observability – Logs, metrics, and tracing are critical. On AWS, this means CloudWatch Logs, X-Ray tracing, and metrics dashboards for latency, retries, and error rates.
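To make the idempotency point concrete, here is a minimal sketch of a deduplication check keyed on the event ID. It uses an in-memory store so it runs anywhere; the class and function names are invented for this example. With DynamoDB, the equivalent of `put_if_absent` would be a conditional `put_item`, as noted in the comments.

```python
class InMemoryDedupStore:
    """Stand-in for a DynamoDB table keyed by event ID.

    With DynamoDB, put_if_absent would be a put_item call using
    ConditionExpression="attribute_not_exists(event_id)" (assuming the key
    attribute is named event_id), treating a
    ConditionalCheckFailedException as "already seen".
    """

    def __init__(self):
        self._seen = set()

    def put_if_absent(self, event_id: str) -> bool:
        if event_id in self._seen:
            return False
        self._seen.add(event_id)
        return True


def process_once(event: dict, store, handler) -> bool:
    """Run handler only the first time an event ID is seen."""
    if not store.put_if_absent(event["id"]):
        return False  # duplicate delivery, skip side effects
    handler(event)
    return True


assigned = []
store = InMemoryDedupStore()
event = {"id": "evt-123", "detail": {"customerId": "c-1"}}
for _ in range(3):  # simulate at-least-once delivery
    process_once(event, store, lambda e: assigned.append(e["detail"]["customerId"]))
print(assigned)  # ['c-1']
```

Recording the ID before running the handler prevents duplicates but means a handler failure needs separate recovery, for example removing the marker so that a retry can proceed.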

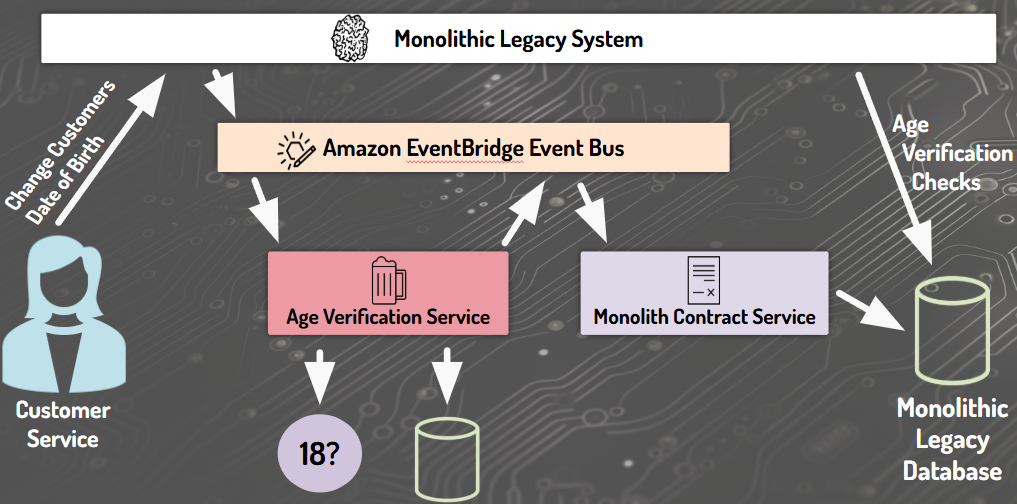

The Final Solution

Here’s the final architecture at a high level:

- A customer registers in the monolith, which publishes a `CustomerRegistered` event to EventBridge.

- The Age Verification Service consumes the event, calls the third-party provider, and stores results in its local database.

- It then publishes `AgeVerificationPassed`/`AgeVerificationFailed` events.

- Legacy Mimic listens and updates the monolith database, keeping compatibility for dependent code.

- Over time, other domains (like address changes) also published events, enabling further decoupling.
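The Age Verification Service's part of this flow can be sketched as a single handler. The provider call, the database write, and the event publisher are injected as plain functions, so the example stays runnable without AWS. All function names and event fields here are assumptions for illustration.

```python
from typing import Callable


def handle_customer_registered(
    event: dict,
    verify_age: Callable[[dict], bool],
    save_result: Callable[[str, bool], None],
    publish: Callable[[str, dict], None],
) -> str:
    """Process one CustomerRegistered event and return the emitted event name."""
    customer = event["detail"]
    customer_id = customer["customerId"]
    passed = verify_age(customer)  # call to the third-party provider
    save_result(customer_id, passed)  # write to the service-owned database
    detail_type = "AgeVerificationPassed" if passed else "AgeVerificationFailed"
    publish(detail_type, {"customerId": customer_id})
    return detail_type


# Wiring with in-memory stand-ins for the provider, database, and event bus.
results, published = {}, []
emitted = handle_customer_registered(
    {"detail-type": "CustomerRegistered", "detail": {"customerId": "c-1"}},
    verify_age=lambda customer: True,  # placeholder for the provider call
    save_result=results.__setitem__,
    publish=lambda name, detail: published.append((name, detail)),
)
print(emitted)  # AgeVerificationPassed
```

Saving the result before publishing means downstream consumers that call back into the service's read API will find the outcome already stored.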

Lessons Learned

Migrating to an event-driven architecture isn’t just about technology – it’s about patterns, testing strategies, and business trade-offs.

Some key takeaways:

- Start small – extract one feature and iterate with the Strangler Fig pattern.

- Emit events early – even if no one consumes them yet, they’ll prove invaluable later. Just be sure to document them.

- Use dark launches and feature flags – test in production safely.

- Design for asynchronous issues – latency, race conditions, and duplicates are inevitable.

- Invest in observability – without it, you’ll fly blind; with it, you can improve the system over time.

Conclusion

Migrating from a monolith to an event-driven architecture on AWS is an evolution, not a big bang rewrite. By combining EventBridge, microservices, and proven migration patterns, you can deliver incremental business value while reducing risk.

Embrace the power of events, let your architecture evolve organically, and don’t be afraid to experiment.